So you've got a workload that absolutely needs to run in a Windows environment, and you're also constrained to run this inside an existing Kubernetes cluster (likely with only Linux nodes).

We recently worked on a project where this was necessary, and hit a few rough edges along the way.

Before beginning, make sure the following is true:

1. Your workload can run on Windows Server 2019 or Windows Server 2022. These are the only supported Windows versions for Kubernetes nodes, and the Windows version of each pod must match the Windows version of the node.

2. You don't plan to leverage Hyper-V isolation (unless you already know you need this, you probably don't)

3. You're using Kubernetes 1.25 or later (Windows support has been 'official' since much earlier, but YMMV)

4. You have access to a Windows license for the image you wish to use (this is 'baked in' to the per hour instance price for AWS EC2 instances, so there's no need to deal with licenses)

5. You are using a self-managed Kubernetes cluster (if you're using EKS or similar, there are images that should simplify this process even further)

6. You're using CoreDNS for DNS resolution (may work otherwise, but YMMV)

Prep the Node

1. Spin up a Windows instance (EC2 or otherwise) with RDP access and roughly 120GB or more of disk space.

2. Modify your firewall rules (security group on AWS) to allow ingress on port 3389 so you can RDP into the instance. Restrict the source CIDR block to a specific bastion box IP if you need to limit access, or use 0.0.0.0/0 for public access.

3. RDP into the instance using the Administrator account, and follow this K8s Windows SIG Guide to install ContainerD, kubelet, and kubeadm on the node and join it to the cluster (you can use the token approach, or copy a kubeconfig file to the Node and use `kubeadm join --discovery-file <your_file_on_the_host_node>`).

Major Note: Ensure ContainerD is version 1.7.16 or later, as earlier versions have a bug in the path used for Windows HostProcess container roots, which we'll be using in later steps

At this point, the node should be visible in your cluster (confirm via kubectl/k9s). It should also be 'ready'. However, we still need to set up networking between pods, and ensure that non-Windows pods do not get scheduled onto the node.
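One way to confirm both points from a machine with kubectl access is sketched below. The helper names (`version_at_least`, `runtime_version`, `node_ready`) are hypothetical, `my-windows-node-name` is a placeholder, and the version comparison assumes GNU `sort -V` is available. The jsonpath fields are the standard Node status fields.

```shell
# Sketch: check node readiness and the containerd version reported by the kubelet.

# version_at_least ACTUAL MINIMUM -> succeeds if ACTUAL >= MINIMUM (needs GNU sort -V).
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# runtime_version NODE -> prints e.g. "1.7.16" (the API reports "containerd://1.7.16").
runtime_version() {
  kubectl get node "$1" \
    -o jsonpath='{.status.nodeInfo.containerRuntimeVersion}' | sed 's|^containerd://||'
}

# node_ready NODE -> prints the status of the Ready condition ("True" when ready).
node_ready() {
  kubectl get node "$1" \
    -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}'
}

# Example usage (uncomment and adjust the node name):
# node=my-windows-node-name
# echo "Ready: $(node_ready "$node")"
# version_at_least "$(runtime_version "$node")" 1.7.16 && echo "containerd OK"
```

If the version check fails, upgrading ContainerD on the node before continuing should save some debugging later, since the HostProcess containers used for networking depend on it.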

4. Taint the node. To avoid accidentally scheduling pods onto the new Windows node (as many DaemonSets and existing Helm charts don't account for the existence of Windows nodes), add a taint to the new Windows node, e.g.:

    kubectl taint nodes my-windows-node-name os=windows:NoSchedule

Without this taint, you'll likely observe pods being scheduled to the Windows node (metrics, Loki, etc) that cannot run on it, and will remain in a crash loop.
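To check that the taint took effect, and to spot any pods that landed on the node before it was applied, something like the following may help. The function names are hypothetical and the node name is a placeholder; the kubectl flags and fields used are standard.

```shell
# Sketch: inspect the taints on a node and the pods currently bound to it.

# node_taints NODE -> prints each taint as key=value:effect, one per line.
node_taints() {
  kubectl get node "$1" \
    -o jsonpath='{range .spec.taints[*]}{.key}={.value}:{.effect}{"\n"}{end}'
}

# has_windows_taint NODE -> succeeds if the os=windows:NoSchedule taint is present.
has_windows_taint() {
  node_taints "$1" | grep -qx 'os=windows:NoSchedule'
}

# pods_on_node NODE -> lists every pod (all namespaces) bound to the node.
pods_on_node() {
  kubectl get pods --all-namespaces -o wide --field-selector "spec.nodeName=$1"
}

# Example usage (uncomment and adjust the node name):
# has_windows_taint my-windows-node-name && echo "taint present"
# pods_on_node my-windows-node-name
```

Note that `NoSchedule` only affects new scheduling decisions, so Linux pods that were already placed on the node need to be deleted by hand.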

Prepare Networking (Kube Proxy and Flannel)

This guide assumes you're using or planning to use Flannel. Otherwise, good luck! :).

If you have an existing Flannel deployment - modify the existing Flannel configmap so that the net-conf section looks like this:

    net-conf.json: |
      {
        "Network": "10.244.0.0/16",
        "Backend": {
          "Type": "vxlan",
          "VNI": 4096,
          "Port": 4789
        }
      }

You might accomplish this by modifying your Helm values, if you deployed using the Flannel Helm chart (in which case, the values to set are `flannel.vni: 4096` and `flannel.backendPort: 4789`).

If you do *not* have an existing Flannel deployment, follow the "Prepare Control Plane for Flannel" section of this guide from the Windows SIG.

Once you have the basic Flannel deployment set up, and the net-conf shows the updated VNI (4096) and Port (4789), it's time to install the Windows-specific kube-proxy and Flannel DaemonSets into the cluster.
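A quick sanity check of the live configuration can be sketched as follows. `net_conf_ok` is a hypothetical helper that does a simple text match rather than real JSON parsing, and the namespace and ConfigMap name in the usage comment are assumptions based on the upstream Flannel manifest, so they may differ in your cluster.

```shell
# Sketch: confirm the Flannel net-conf uses the vxlan backend with VNI 4096 and port 4789.

# net_conf_ok -> reads net-conf.json on stdin; succeeds only if all three settings match.
net_conf_ok() {
  conf=$(cat)
  printf '%s' "$conf" | grep -Eq '"Type"[[:space:]]*:[[:space:]]*"vxlan"' &&
  printf '%s' "$conf" | grep -Eq '"VNI"[[:space:]]*:[[:space:]]*4096([^0-9]|$)' &&
  printf '%s' "$conf" | grep -Eq '"Port"[[:space:]]*:[[:space:]]*4789([^0-9]|$)'
}

# Example usage (namespace and ConfigMap name are assumptions):
# kubectl get configmap -n kube-flannel kube-flannel-cfg \
#   -o jsonpath="{.data['net-conf\.json']}" | net_conf_ok && echo "net-conf OK"
```

If you changed the ConfigMap on an existing deployment, the Flannel pods most likely need a restart before the new values are picked up.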

Windows-Specific kube-proxy and Flannel Setup

However, there is a problem - the remainder of the Windows SIG guide (as of June 2024) attempts to install using old versions of the Flannel HPC image, which encounter path issues when launching a container. There is an issue to address this, but in the meantime, a working image for Flannel v0.22.1 is available here.

So, continue to follow the Windows SIG guide under the "Add Windows flannel solution" section, but you'll also want to substitute the image for the working Flannel image from the 3rd-party repo ('jjmilburn') above, e.g.:

    # Read the API server address (host:port) from the kube-proxy ConfigMap
    controlPlaneEndpoint=$(kubectl get configmap -n kube-system kube-proxy -o jsonpath="{.data['kubeconfig\.conf']}" | grep server: | sed 's/.*\:\/\///g')
    kubernetesServiceHost=$(echo $controlPlaneEndpoint | cut -d ":" -f 1)
    kubernetesServicePort=$(echo $controlPlaneEndpoint | cut -d ":" -f 2)

    # Fill in the template, swap the image repo to 'jjmilburn', and apply it
    curl -L https://raw.githubusercontent.com/kubernetes-sigs/sig-windows-tools/master/hostprocess/flannel/flanneld/flannel-overlay.yml \
      | sed 's/FLANNEL_VERSION/v0.22.1/g' \
      | sed "s/KUBERNETES_SERVICE_HOST_VALUE/$kubernetesServiceHost/g" \
      | sed "s/KUBERNETES_SERVICE_PORT_VALUE/$kubernetesServicePort/g" \
      | sed "s/sigwindowstools/jjmilburn/g" \
      | kubectl apply -f -
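Before applying anything, it can be worth checking what the host and port extraction actually produces for your cluster. The sketch below reimplements the same grep/sed/cut steps as a small function so it can be tried on sample input; `split_endpoint` is a hypothetical name, not part of the Windows SIG tooling.

```shell
# Sketch: extract "<host> <port>" from the "server:" line of a kubeconfig.

# split_endpoint -> reads kubeconfig text on stdin, prints host and port separated by a space.
split_endpoint() {
  endpoint=$(grep 'server:' | sed 's|.*://||')
  host=$(printf '%s' "$endpoint" | cut -d ':' -f 1)
  port=$(printf '%s' "$endpoint" | cut -d ':' -f 2)
  printf '%s %s\n' "$host" "$port"
}

# Example usage against the live cluster (uncomment to run):
# kubectl get configmap -n kube-system kube-proxy \
#   -o jsonpath="{.data['kubeconfig\.conf']}" | split_endpoint
```

One caveat with this approach: if the server URL has no explicit port, `cut` finds no delimiter and returns the whole string for both fields, so the port value would end up wrong. In that case, set the port by hand.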

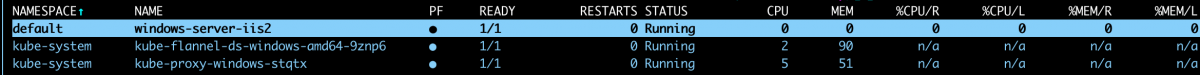

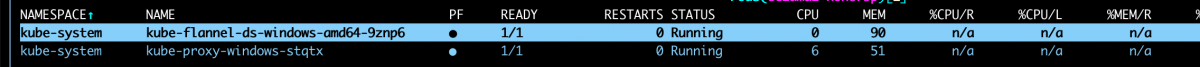

After applying this change and following the existing guidance from the same document on kube-proxy install, the node should show both flannel and kube-proxy running, and no other pods:

Testing It Out

Finally, apply a simple Pod manifest for a Windows container, and ensure it's scheduled to the new Windows node. For example:

    apiVersion: v1
    kind: Pod
    metadata:
      name: windows-server-iis2
      labels:
        app: windows-server-iis2
    spec:
      containers:
      - name: windows-server-iis2
        image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2022
        resources:
          limits:
            cpu: 1
            memory: 800Mi
          requests:
            cpu: .1
            memory: 300Mi
      # Only schedule onto Windows nodes
      nodeSelector:
        kubernetes.io/os: windows
      # Tolerate the taint added to the Windows node earlier
      tolerations:
      - key: "os"
        operator: "Equal"
        value: "windows"
        effect: "NoSchedule"

After saving the above as 'test-win.yaml' and running `kubectl apply -f test-win.yaml`, we should see the pod running on the Windows node.
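The same check can be done from the command line. This is a minimal sketch with hypothetical helper names; it assumes the pod name `windows-server-iis2` from the manifest, the default namespace, and a placeholder node name.

```shell
# Sketch: confirm where the test pod was scheduled and what state it is in.

# pod_node POD -> prints the name of the node the pod is bound to.
pod_node() {
  kubectl get pod "$1" -o jsonpath='{.spec.nodeName}'
}

# pod_phase POD -> prints the pod phase (e.g. Pending, Running).
pod_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}

# on_expected_node POD NODE -> succeeds if the pod is bound to NODE.
on_expected_node() {
  [ "$(pod_node "$1")" = "$2" ]
}

# Example usage (uncomment and adjust the node name):
# kubectl wait --for=condition=Ready pod/windows-server-iis2 --timeout=10m
# on_expected_node windows-server-iis2 my-windows-node-name && echo "scheduled on Windows node"
# pod_phase windows-server-iis2
```

The generous timeout is deliberate: Windows Server Core images are several gigabytes, so the first pull on a fresh node can take a while and the pod may sit in `ContainerCreating` for some minutes.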