Tool use (or function calling) isn't new to LLMs, and the abstraction layers that enable wider tool use grow more accessible every day. LangChain, for example, offers a provider-agnostic way of exposing tools to agents:

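```python
from langchain.agents import AgentExecutor, create_tool_calling_agent
from langchain_core.tools import tool

@tool
def magic_function(input: int) -> int:
    """Applies a magic function to an input."""
    return input + 2

tools = [magic_function]

# `model` (any tool-calling chat model) and `prompt` (a ChatPromptTemplate that
# includes an "agent_scratchpad" placeholder) are assumed to be defined already.
agent = create_tool_calling_agent(model, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools)
```

Frameworks like CrewAI offer a subset of LangChain's built-in tools, making it trivial to add functionality to one or more agents, e.g.: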

```python
import os

from crewai import Agent, Task, Crew

# Importing crewAI tools
from crewai_tools import (
    SerperDevTool,
    WebsiteSearchTool
)

# Set up API keys
os.environ["SERPER_API_KEY"] = "Your Key"  # serper.dev API key
os.environ["OPENAI_API_KEY"] = "Your Key"

# Instantiate tools
search_tool = SerperDevTool()
web_rag_tool = WebsiteSearchTool()

# Create agents
researcher = Agent(
    role='Market Research Analyst',
    goal='Provide up-to-date market analysis of the AI industry',
    backstory='An expert analyst with a keen eye for market trends.',
    tools=[search_tool, web_rag_tool],
    verbose=True
)
```

And just last week, OpenAI announced their Agents SDK, which provides a similar abstraction: an "agent" (instead of a completion) is defined and given access to tools, which it uses as needed to achieve a given goal:

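```python
from dataclasses import dataclass

from agents import Agent, RunContextWrapper, function_tool

@dataclass
class MyContext:
    user_id: str  # example field carried through the run context

@function_tool
def greet_user(context: RunContextWrapper[MyContext], greeting: str) -> str:
    """Greets the user by ID, echoing their greeting."""
    user_id = context.context.user_id
    return f"Hello {user_id}, you said: {greeting}"

agent = Agent[MyContext](
    name="agent_with_tool",
    tools=[greet_user],
)
```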

Notice that in all of these cases, the paradigm is 'provide one or more tools to an agent', where an 'agent' is an LLM invoked in a loop to achieve a user-specified outcome (where in each pass of that loop, it might call a tool, generate an answer, or hand off control to another agent). And undoubtedly, this is powerful - agentic tool use powers OpenAI Operator, browser-use, Perplexity Deep Research, and similar use cases.
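To make the "LLM in a loop" idea concrete, here is a minimal, framework-free sketch. It is not how any of the frameworks above are implemented. `scripted_model` is a hypothetical stand-in for a real LLM call that returns either a tool call or a final answer, and the loop keeps executing tools until an answer comes back. Handoffs to other agents are omitted.

```python
from typing import Any, Callable

# Hypothetical stand-in for an LLM: inspects the conversation and returns
# either {"tool": name, "args": {...}} or {"answer": text}.
def scripted_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "magic_function", "args": {"input": 40}}
    return {"answer": f"The result is {tool_results[-1]['content']}"}

def magic_function(input: int) -> int:
    """Applies a magic function to an input."""
    return input + 2

def run_agent(
    model: Callable[[list[dict[str, Any]]], dict[str, Any]],
    tools: dict[str, Callable[..., Any]],
    query: str,
    max_steps: int = 5,
) -> str:
    messages: list[dict[str, Any]] = [{"role": "user", "content": query}]
    for _ in range(max_steps):
        step = model(messages)
        if "answer" in step:  # the model decided it is done
            return step["answer"]
        # Otherwise run the requested tool and feed its result back to the model
        result = tools[step["tool"]](**step["args"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("Agent did not finish within max_steps")

print(run_agent(scripted_model, {"magic_function": magic_function}, "Apply the magic function to 40"))
# → The result is 42
```

The `max_steps` cap is a common safeguard so that a model which never produces an answer cannot loop forever.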

That's all well and good, but the human is still on the hook for defining and exposing tools to the agent. What if the agent itself could automatically determine and generate the 'right' tools for a given job, moving the developer up another level of abstraction?

Advanced Tool Learning and Selection System (ATLASS)

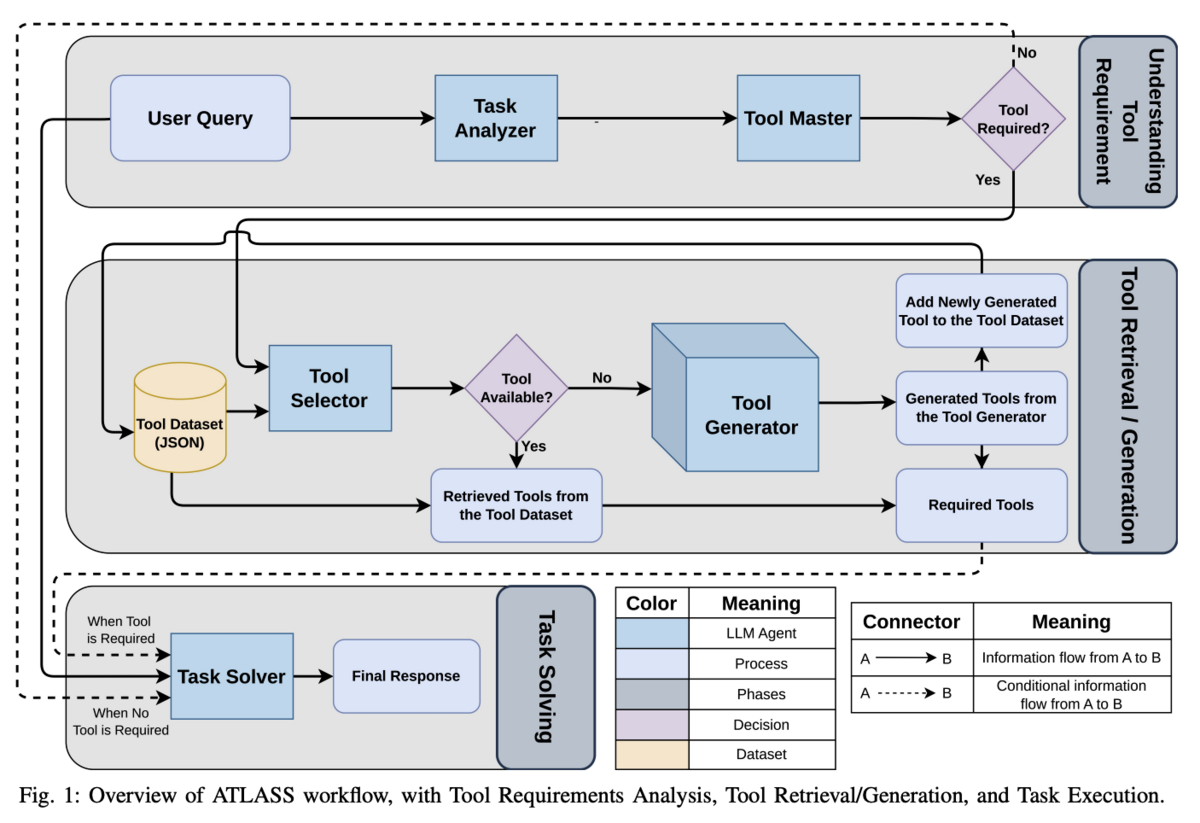

The recent ATLASS paper describes a process that achieves this higher level of tool-use autonomy in three high-level phases:

1. Determine what, if any, tools are required to accomplish a user query ("Understanding Tool Requirements")

2. Retrieve and/or generate the required tools ("Tool Retrieval/Generation")

3. Use the tools to solve the tasks represented by the user query ("Task Solving")
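The three phases can be wired together roughly as follows. This is a minimal sketch, not the paper's code: each agent is modeled as a plain Python function, and `analyze_tasks`, `master_tools`, `solve`, and `run_atlass` are hypothetical names whose stubs return canned values so the control flow runs end to end. "Generating" a tool is reduced to recording its name in a set that stands in for the tool database.

```python
from dataclasses import dataclass, field

@dataclass
class PipelineResult:
    subtasks: list[str]
    required_tools: list[str]
    retrieved: list[str] = field(default_factory=list)
    generated: list[str] = field(default_factory=list)
    answer: str = ""

# Hypothetical stubs for the individual agents (real versions would call an LLM).
def analyze_tasks(query: str) -> list[str]:
    return ["fetch book data from a website", "sort the results"]

def master_tools(subtasks: list[str]) -> list[str]:
    return ["Web_Scraper"]

def solve(query: str, tools: list[str]) -> str:
    return f"solved {query!r} with tools {tools}"

def run_atlass(query: str, tool_db: set[str]) -> PipelineResult:
    # Phase 1: Understanding Tool Requirements
    subtasks = analyze_tasks(query)
    required = master_tools(subtasks)
    result = PipelineResult(subtasks, required)
    # Phase 2: Tool Retrieval/Generation (skipped when no tools are needed)
    if required:
        result.retrieved = [t for t in required if t in tool_db]
        result.generated = [t for t in required if t not in tool_db]
        tool_db.update(result.generated)  # store new tools so later queries reuse them
    # Phase 3: Task Solving
    result.answer = solve(query, result.retrieved + result.generated)
    return result

db: set[str] = set()
print(run_atlass("top 100 scientific books", db).generated)  # first run: ['Web_Scraper']
print(run_atlass("top 100 scientific books", db).retrieved)  # second run: ['Web_Scraper']
```

The second call finds the tool already in the database, which is where the cost savings discussed later come from.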

One key difference between this approach and other tool-generation frameworks like "LLMs as Tool Makers" is the introduction of web search (via SerpAPI) to incorporate live API updates for cloud-based tools.

ATLASS consists of multiple individual LLM agents, a multi-agent LLM block, and a tool database. The sections below explore how these pieces fit together.

Phase 1: Understanding Tool Requirements

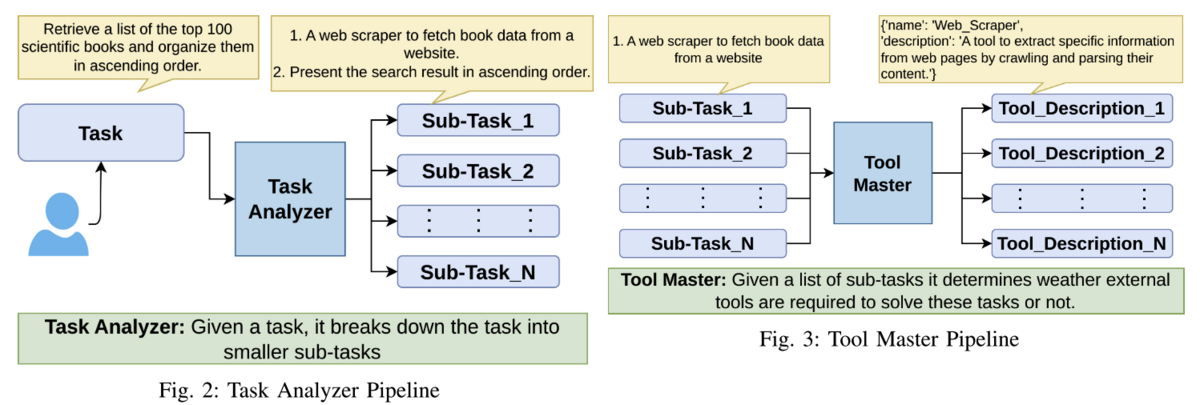

Here, the user query is analyzed by a "Task Analyzer" LLM agent to determine what tasks make up that query. For example, if a user asks to find the "top 100 scientific books", the Task Analyzer will decompose it into:

- Use a web scraper to fetch book data from a website

- Present the search results in ascending order

This list of tasks is then passed to the "Tool Master", which generates a list of tool names/descriptions that it believes are necessary to complete those tasks. In the '100 books' example from the paper, the Tool Master determines that it needs a web scraper:

```json
[
  {
    "name": "Web_Scraper",
    "description": "A tool to extract specific information from web pages by crawling their content"
  }
]
```

This process is visualized in Figures 2 and 3 of the paper.

If no tool is required, the Tool Master returns no tools, and the user query and tasks are passed directly to the Task Solver, skipping the second phase ("Tool Retrieval/Generation") entirely and solving the task without any tools.

If tools are required, the list of those tools (from the "Tool Master") and the subtasks (from the "Task Analyzer") are passed to the next phase, "Tool Retrieval/Generation".
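Downstream code has to turn the Tool Master's output into something it can branch on. Here is a sketch that assumes the output is exactly a JSON list like the one shown above. `RequiredTool` and `parse_tool_master_output` are hypothetical names, and a real system would also need to handle malformed LLM output.

```python
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class RequiredTool:
    name: str
    description: str

def parse_tool_master_output(raw: str) -> list[RequiredTool]:
    """Parse the Tool Master's JSON list; an empty list means no tools are needed."""
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError("expected a JSON list of tool specs")
    return [RequiredTool(item["name"], item["description"]) for item in data]

raw = '[{"name": "Web_Scraper", "description": "A tool to extract specific information from web pages by crawling their content"}]'
tools = parse_tool_master_output(raw)
# Empty list → skip straight to Task Solving; otherwise go to Phase 2
next_phase = "Tool Retrieval/Generation" if tools else "Task Solving"
print(tools[0].name, "->", next_phase)  # → Web_Scraper -> Tool Retrieval/Generation
```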

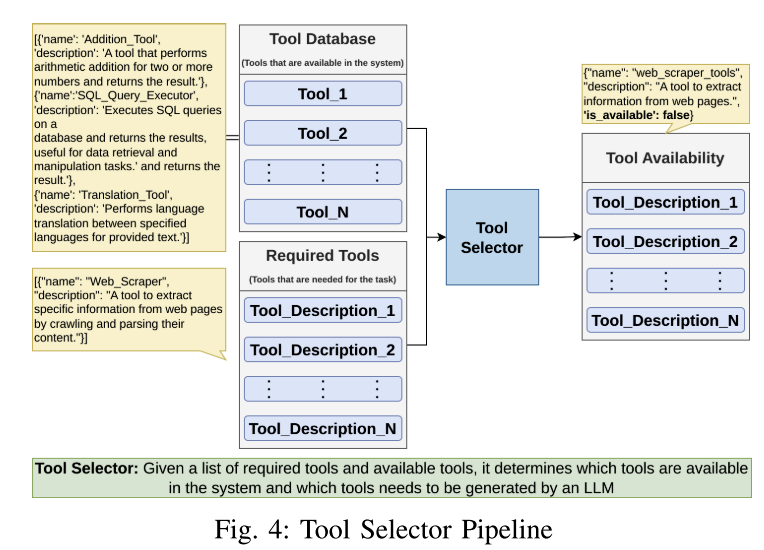

Phase 2: Tool Retrieval/Generation

The "Tool Selector" agent receives the list of "Required Tools" from Phase 1, as well as input from a database of existing tools (which only agents in this second phase can access). The tool database stores a name, description, and function name for each tool, where the 'function name' identifies a matching file in the local filesystem that contains the tool's Python implementation. Figure 4 illustrates this:

If the "Tool Selector" finds a similar or matching tool in the database for every required tool, it passes the retrieved tools, along with the user query, to the third phase (Task Solving).

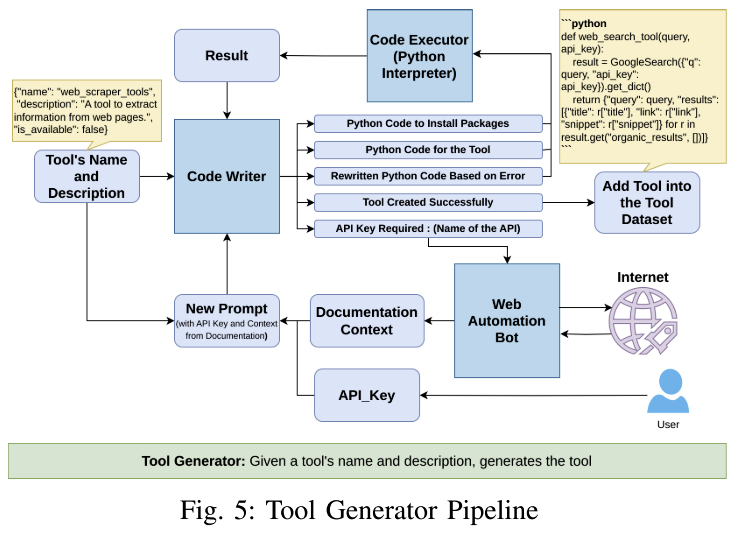
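The retrieval step can be sketched as a lookup over records that hold the three fields described above. This is a simplification under stated assumptions. The paper's Tool Selector is an LLM judging similarity, whereas `find_tool` below only does an exact, case-insensitive name match. The `<function_name>.py` file layout is a hypothetical reading of "a matching file in the local filesystem". `ToolRecord`, `find_tool`, and `load_tool` are illustrative names.

```python
import importlib.util
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, Optional

@dataclass
class ToolRecord:
    name: str
    description: str
    function_name: str  # assumed to also be the stem of the .py implementation file

def find_tool(db: list[ToolRecord], required_name: str) -> Optional[ToolRecord]:
    # Exact, case-insensitive match; an LLM-based selector would also accept near matches
    for record in db:
        if record.name.lower() == required_name.lower():
            return record
    return None

def load_tool(record: ToolRecord, tool_dir: Path) -> Callable:
    """Import <tool_dir>/<function_name>.py and return the function of that name."""
    path = tool_dir / f"{record.function_name}.py"
    spec = importlib.util.spec_from_file_location(record.function_name, path)
    if spec is None or spec.loader is None:
        raise ImportError(f"cannot load tool from {path}")
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    return getattr(module, record.function_name)

with tempfile.TemporaryDirectory() as d:
    Path(d, "sort_books.py").write_text("def sort_books(titles):\n    return sorted(titles)\n")
    db = [ToolRecord("Book_Sorter", "Sorts book titles", "sort_books")]
    record = find_tool(db, "book_sorter")
    print(load_tool(record, Path(d))(["b", "a"]))  # → ['a', 'b']
```

A `None` result from `find_tool` marks a tool that has to be generated instead.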

Otherwise, the "Tool Generator" agent is invoked, which contains three subagents:

- Code Writer

- Code Executor

- Web Automation Bot

The "Code Writer" subagent first receives the tool list from the Tool Selector and, for each 'unavailable' tool, attempts to write Python code that implements that tool's functionality. After writing a draft implementation, it passes control to the "Code Executor" subagent to verify that the code runs and 'works as intended'. If an error occurs, the error and code are passed back to the "Code Writer", which revises the code, and the loop repeats. If the tool can be created without any API keys for external services, the Web Automation Bot is not involved at all.
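The Code Writer / Code Executor interaction is essentially a write-run-revise loop. Here is a minimal sketch under loud assumptions. `fake_code_writer` is a hypothetical stand-in for the LLM: it returns a buggy first draft and a fixed one once it sees an error. The "executor" simply `exec`s the draft and runs a caller-supplied smoke test in a fresh namespace. Running LLM-written code like this is unsafe outside a sandbox.

```python
import traceback
from typing import Callable, Optional

Writer = Callable[[str, Optional[str], Optional[str]], str]

def execute_draft(code: str, func_name: str, smoke_test: Callable[[Callable], None]) -> Optional[str]:
    """Code Executor: run the draft plus a smoke test; return None or the error text."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # untrusted, LLM-written code: sandbox this in practice
        smoke_test(namespace[func_name])
        return None
    except Exception:
        return traceback.format_exc()

def fake_code_writer(spec: str, previous: Optional[str], error: Optional[str]) -> str:
    """Hypothetical Code Writer stand-in: buggy first draft, fixed once it sees an error."""
    if error is None:
        return "def add_two(x):\n    return x + '2'\n"
    return "def add_two(x):\n    return x + 2\n"

def build_tool(spec: str, func_name: str, smoke_test: Callable[[Callable], None],
               writer: Writer = fake_code_writer, max_rounds: int = 3) -> str:
    code: Optional[str] = None
    error: Optional[str] = None
    for _ in range(max_rounds):
        code = writer(spec, code, error)  # write, or revise given the last error
        error = execute_draft(code, func_name, smoke_test)
        if error is None:
            return code
    raise RuntimeError(f"tool still failing after {max_rounds} rounds:\n{error}")

def check_add_two(fn: Callable) -> None:
    assert fn(40) == 42

print(build_tool("add two to an integer", "add_two", check_add_two))
```

The first draft raises a `TypeError` inside the smoke test. The traceback is fed back to the writer, and the second draft passes. Capping the number of rounds keeps a writer that never converges from looping indefinitely.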

If, however, the tool requires an API key (for example, to fetch real-time stock prices), the Code Writer recognizes this and searches for up-to-date documentation on an API that can provide the required information. At this point, the user is also prompted for an API key for that service. Once the key is provided, the Code Writer combines it with the API documentation (found via web search) to generate a tool that uses the external API.
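How the key actually reaches the generated tool is an implementation detail that this description leaves open. One plausible approach is to check an environment variable first and only prompt the user when it is missing. In the sketch below, `resolve_api_key` and the `STOCK_API_KEY` variable name are hypothetical. The `ask` parameter is injectable so the interactive prompt can be replaced, for example in tests.

```python
import getpass
import os
from typing import Callable

def resolve_api_key(env_var: str, service: str,
                    ask: Callable[[str], str] = getpass.getpass) -> str:
    """Return the key from the environment, or prompt the user once and cache it."""
    key = os.environ.get(env_var)
    if not key:
        key = ask(f"Enter your {service} API key: ").strip()
        if not key:
            raise ValueError(f"No API key provided for {service}")
        os.environ[env_var] = key  # cache for later tool calls in this process
    return key

# Example (interactive): resolve_api_key("STOCK_API_KEY", "stock price")
```

Caching the key in the process environment means the user is prompted at most once per session, even if the generated tool is called many times.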

Phase 3: Task Solving

The task-solving phase is fairly straightforward: the "Task Solver" agent has access to the user query and any tools (if Phase 1 determined that tools were necessary and Phase 2 retrieved or generated them). The Task Solver then iteratively executes the provided tools to complete the tasks needed to answer the user's query, and eventually returns a result. A good mental model for the Task Solver is a 'traditional' agent like the ones described in the introduction to this post: an LLM that executes in a loop, with access to a static list of tools. The heavy lifting of identifying and generating those tools is done by the prior phases of the ATLASS framework.

What does it cost?

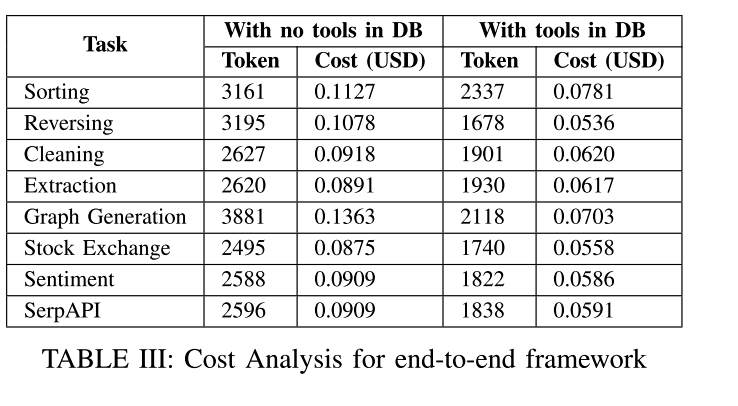

The authors specifically note that storing the generated tools in a database saves money. They share a table comparing the cost of solving a user query with and without the relevant tools already in the tool database, using gpt-4-0613.

Not bad! Once the tools have been generated, solving a user query costs between 5 and 8 cents, and that price can likely be optimized further. The authors used gpt-4-0613, a now-deprecated model priced at $30.00/M input tokens and $60.00/M output tokens, comparable to the latest o1 reasoning models. Simply switching to gpt-4o ($2.50/M input tokens, $10.00/M output tokens) would make each query between 6x and 12x cheaper, depending on the mix of input and output tokens. That brings the cost down to roughly 0.4 to 1.3 cents per query.
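The 6x figure is a lower bound. gpt-4o is 12x cheaper per input token (30.00 / 2.50) but only 6x cheaper per output token (60.00 / 10.00), so the real saving depends on how a query's tokens split between input and output. A quick calculation makes this concrete. The token counts are hypothetical and chosen only to span the range, and the prices are the per-million-token figures quoted above.

```python
# Per-million-token prices quoted above (USD)
PRICES = {
    "gpt-4-0613": {"input": 30.00, "output": 60.00},
    "gpt-4o": {"input": 2.50, "output": 10.00},
}

def query_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one query with the given token counts."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

# Hypothetical token mixes: input-only, mixed, output-only
for inp, out in [(2_000, 0), (2_000, 500), (0, 1_000)]:
    old, new = query_cost("gpt-4-0613", inp, out), query_cost("gpt-4o", inp, out)
    print(f"{inp:>5} in / {out:>5} out: ${old:.4f} -> ${new:.4f} ({old / new:.0f}x cheaper)")
```

Input-heavy queries approach the 12x end of the range, and output-heavy queries approach 6x.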